Fixing noisy neighbor problems in multi-tenant queueing systems

Ensuring fairness and consistent performance for all users with concurrency controls

Dan Farrelly · 6/28/2024 · 7 min read

Multi-tenant queueing systems are complex to manage and prone to noisy neighbor problems. High-volume users can monopolize resources, causing delays for other users, who experience those delays as degraded performance. Systems need to ensure fairness and consistent performance for all users, regardless of their usage levels.

There are several approaches to solving this problem, but many require complex infrastructure management and custom worker logic.

In this post, we'll walk through these approaches and how Inngest is purpose-built to handle multi-tenant queueing systems without the overhead and complexity.


Noisy neighbors and queueing systems

In multi-tenant systems, users share the same infrastructure resources. A "noisy neighbor" refers to a high-usage user consuming excessive resources, thereby affecting other users' performance.

In queueing systems, a noisy neighbor can monopolize a queue (or multiple queues), causing other users to wait longer for their jobs to be processed. This can lead to delays and frustration for users with lower usage levels. In these systems it's important to ensure fairness and consistent performance for all users, regardless of their usage levels.

Here is a visualization of a single user monopolizing a first-in-first-out (FIFO) queue:

As we can see in this visualization, the high-usage user is causing delays for other users.
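To put rough numbers on this, here is a minimal sketch of a single FIFO queue served by one worker. The `FifoJob` type, the user names, and the two-second job cost are all hypothetical values chosen for illustration.

```typescript
// Hypothetical job shape for illustration only.
type FifoJob = { user: string; id: number };

// User "a" enqueues 8 jobs before users "b" and "c" enqueue one each.
const fifoQueue: FifoJob[] = [
  ...Array.from({ length: 8 }, (_, i) => ({ user: "a", id: i + 1 })),
  { user: "b", id: 1 },
  { user: "c", id: 1 },
];

// With a single worker and a fixed cost per job, a job's wait time is
// the number of jobs ahead of it multiplied by that cost.
function fifoWaitTimes(queue: FifoJob[], secondsPerJob: number): Map<string, number> {
  const firstStart = new Map<string, number>();
  queue.forEach((job, position) => {
    // Record only the moment each user's first job begins processing.
    if (!firstStart.has(job.user)) {
      firstStart.set(job.user, position * secondsPerJob);
    }
  });
  return firstStart;
}

const fifoWaits = fifoWaitTimes(fifoQueue, 2);
// fifoWaits: a -> 0s, b -> 16s, c -> 18s
```

Users "b" and "c" each submitted a single job, yet they wait behind all eight of user "a"'s jobs before any of their work starts.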

Ensuring fairness in multi-tenant systems

The goal in building multi-tenant systems is to provide a fair and consistent experience for all users. When a system doesn't account for fairness, users whose jobs are stuck behind another tenant's backlog will simply conclude that the system itself is slow.

Here is a visualization of a fair queueing system where each user receives equal processing time:

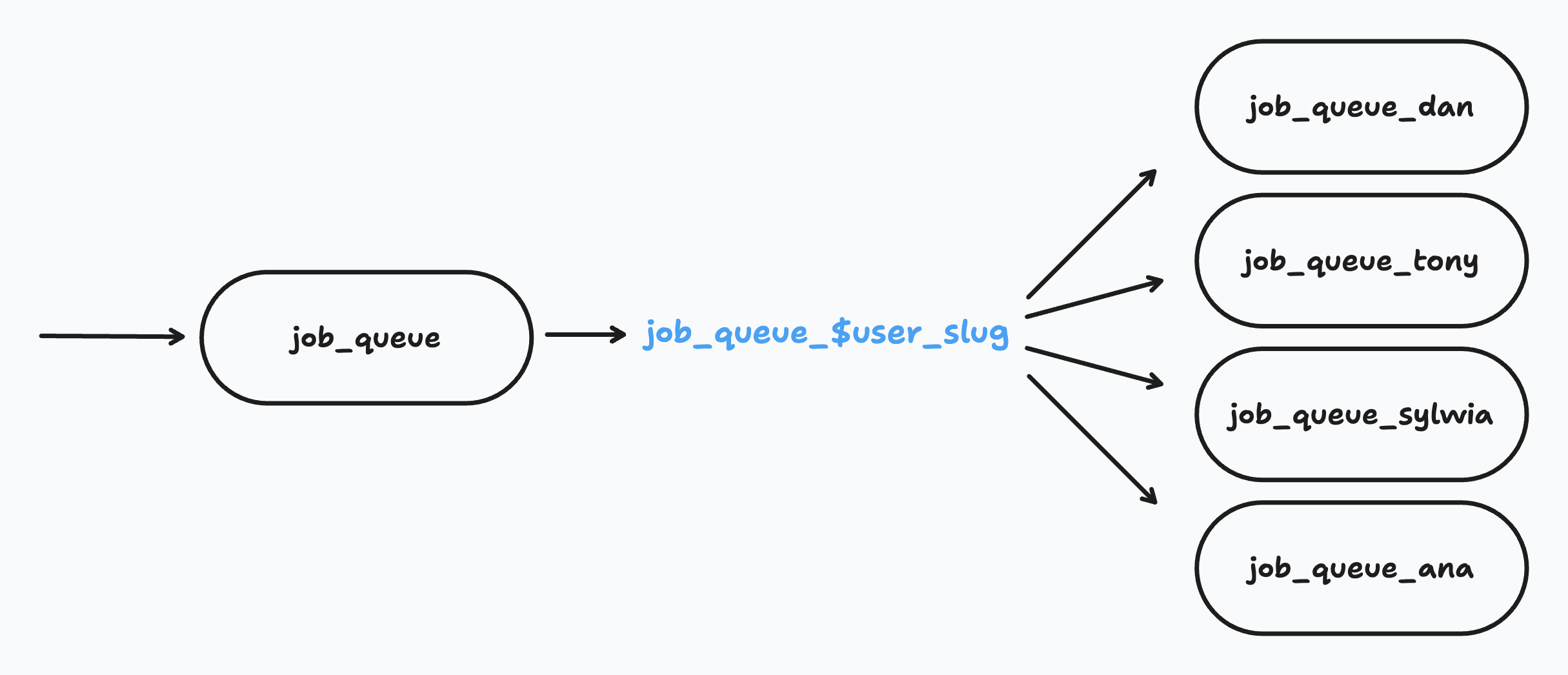
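One simple way to approximate this kind of fairness is round-robin ordering: take one job per user per pass. The sketch below is only an illustration with hypothetical names (`TenantJob`, `roundRobinOrder`), and it assumes every job costs roughly the same, so that equal turns translate into equal processing time.

```typescript
// Hypothetical job shape for illustration only.
type TenantJob = { user: string; id: number };

// Group jobs by user, then take one job per user on each pass.
function roundRobinOrder(jobs: TenantJob[]): TenantJob[] {
  const perUser = new Map<string, TenantJob[]>();
  for (const job of jobs) {
    const list = perUser.get(job.user) ?? [];
    list.push(job);
    perUser.set(job.user, list);
  }

  const ordered: TenantJob[] = [];
  while (ordered.length < jobs.length) {
    // Map preserves insertion order, so users are visited in arrival order.
    perUser.forEach((list) => {
      const next = list.shift();
      if (next) ordered.push(next);
    });
  }
  return ordered;
}

const backlog: TenantJob[] = [
  { user: "a", id: 1 },
  { user: "a", id: 2 },
  { user: "a", id: 3 },
  { user: "b", id: 1 },
  { user: "c", id: 1 },
];

const fairOrder = roundRobinOrder(backlog).map((j) => `${j.user}${j.id}`);
// fairOrder: ["a1", "b1", "c1", "a2", "a3"]
```

Users "b" and "c" are served right after user "a"'s first job instead of waiting for the whole backlog to drain.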

Achieving fairness - A queue per user

An ideal approach to fairness is to provide a queue per user. This way, each user's workloads are isolated, preventing any single user from affecting others.

This approach introduces several challenges:

- Infrastructure overhead and costs: Creating and maintaining thousands of individual queues is cumbersome and potentially very expensive.

- Worker complexity and performance: Workers need to scan and pull from multiple queues intelligently to prioritize work effectively. You are unlikely to be able to afford a worker per user at scale, so each worker needs to handle multiple queues and readjust as more users are added to your system. If this is not done well, it can lead to poor performance for all users.

- Queue configuration and management: Most traditional message queues require pre-configuring your queues either in infrastructure-as-code or programmatically. This may work for a small number of users, but it's not practical at scale. Additionally, you'll need some sort of state management to keep track of all these queues.

- Hybrid of multi- and single-tenant architecture: Managing a queue per user represents a shift towards a single-tenant architecture, which may be a significant departure from the existing architecture of your multi-tenant system. The additional architectural complexity adds to the overhead of the engineering team that maintains it.

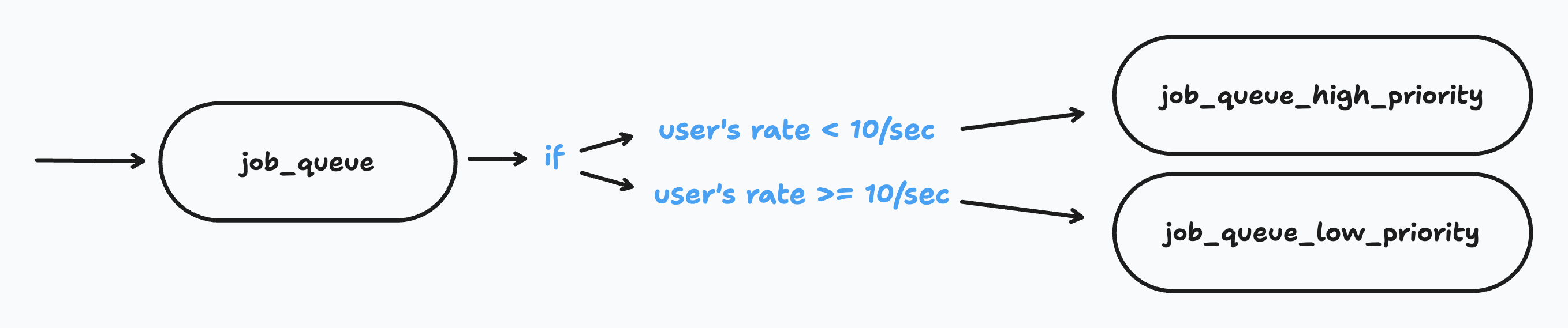
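To make the worker and state-management challenges concrete, here is a minimal in-memory sketch of what a queue-per-user setup has to track. `PerUserQueues` is a hypothetical class for illustration; a real system would need durable queues plus shared state so that every worker sees the same set of queues.

```typescript
// In-memory stand-in for per-user queues (illustration only).
class PerUserQueues<T> {
  private queues = new Map<string, T[]>();
  // Users with pending work, in the order they will be served next.
  private rotation: string[] = [];

  enqueue(user: string, job: T): void {
    let queue = this.queues.get(user);
    if (!queue) {
      // Queues are created lazily the first time a user submits work.
      queue = [];
      this.queues.set(user, queue);
      this.rotation.push(user);
    }
    queue.push(job);
  }

  // Take one job from the user at the front of the rotation, then move
  // that user to the back so others get a turn before it is served again.
  dequeue(): { user: string; job: T } | undefined {
    const user = this.rotation.shift();
    if (user === undefined) return undefined;
    const queue = this.queues.get(user)!;
    const job = queue.shift()!;
    if (queue.length > 0) {
      this.rotation.push(user);
    } else {
      // Drop empty queues so the tracked state does not grow forever.
      this.queues.delete(user);
    }
    return { user, job };
  }

  activeQueueCount(): number {
    return this.queues.size;
  }
}

const userQueues = new PerUserQueues<string>();
userQueues.enqueue("a", "a-1");
userQueues.enqueue("a", "a-2");
userQueues.enqueue("b", "b-1");

const served = [userQueues.dequeue(), userQueues.dequeue(), userQueues.dequeue()].map(
  (result) => result?.job
);
// served: ["a-1", "b-1", "a-2"]
```

Even this toy version has to create queues on demand, keep a rotation of active users, and clean up empty queues. Doing the same across many workers and a real message broker is where the overhead described above comes from.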

Alternative approaches - Priority queues

An alternative approach might be to use separate high- and low-priority queues. As usage increases for a particular user, their jobs are moved to the low-priority queue. This approach can help to ensure that high-usage users don't monopolize resources.

This approach can be effective, but has other challenges:

- Implementing rate limiting: The system needs to track the rate of jobs for each user, for every queue. This is similar to API endpoint rate limiting, but requires additional infrastructure and logic to manage.

- Shifting the problem to the low-priority queue: A user who is moved to the low-priority queue may still flood that queue, causing delays for other users who only narrowly exceeded the defined rate.

- Fine-tuning the system for efficiency: Rate limits and worker capacity for high and low priority queues will have to be tuned to ensure that the system is efficient and fair. This takes additional time and must be monitored and adjusted as usage patterns change.
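The rate tracking this approach needs can be sketched as follows. This is a minimal illustration using a fixed-window counter per user; `PriorityRouter`, the threshold of 3 jobs, and the 60-second window are hypothetical values, not recommendations.

```typescript
type Priority = "high" | "low";

// Fixed-window job counter per user (illustration only).
class PriorityRouter {
  private counts = new Map<string, { windowStart: number; count: number }>();
  private maxJobsPerWindow: number;
  private windowMs: number;

  constructor(maxJobsPerWindow: number, windowMs: number) {
    this.maxJobsPerWindow = maxJobsPerWindow;
    this.windowMs = windowMs;
  }

  // Decide which queue a new job goes to. `now` is passed in
  // (milliseconds) to keep the example deterministic.
  route(user: string, now: number): Priority {
    let entry = this.counts.get(user);
    if (!entry || now - entry.windowStart >= this.windowMs) {
      // Start a fresh window for this user.
      entry = { windowStart: now, count: 0 };
      this.counts.set(user, entry);
    }
    entry.count += 1;
    return entry.count <= this.maxJobsPerWindow ? "high" : "low";
  }
}

const router = new PriorityRouter(3, 60000);

// A heavy user submits five jobs within a few seconds.
const heavyDecisions = [0, 1, 2, 3, 4].map((i) => router.route("heavy-user", i * 1000));
// heavyDecisions: ["high", "high", "high", "low", "low"]

const lightDecision = router.route("light-user", 5000); // "high"
const afterWindowReset = router.route("heavy-user", 61000); // "high" again
```

Note that every user over the threshold lands in the same low-priority queue, which is exactly the "shifting the problem" issue described above.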

Inngest: Concurrency limits for each tenant

The Inngest platform has built-in support for easily defining concurrency limits that are automatically applied for each tenant.

Compared to approaches that have to combine queue infrastructure management, custom worker logic, and state management, Inngest manages all infrastructure and has embedded flow control logic within the queue itself. This allows your system to have the best of both worlds: the simplicity of a multi-tenant system with the fairness of a single-tenant system.

How it works

Concurrency controls are defined within each Inngest function's configuration in just a couple of lines of code. Here's how it works:

- You define the concurrency `limit` in your function configuration.

- Specifying a concurrency `key` will apply this limit to each unique value of the key. This key can be anything relevant to your system, such as user ID, organization ID, email, workspace ID, etc.

- As new runs (aka jobs) are created, Inngest dynamically creates a new queue for each unique key value. The system internally handles the complexity of managing these queues and consuming work efficiently off of each queue, so you don't have to.

Let's take a look at some example code:

    export const multiTenantConcurrency = inngest.createFunction(
      {
        id: 'multi-tenant-concurrency',
        concurrency: [
          {
            limit: 1,
            // Add a key to apply the concurrency limit to each unique user_slug
            key: 'event.data.user_slug',
          },
        ],
      },
      { event: 'demo/job.created' },
      async ({ event, step }) => {
        /* function logic omitted for example */
        return { status: 'success' };
      }
    );
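To see what `limit: 1` with a per-user key means in practice, here is a small model of the behavior in plain TypeScript. It is not Inngest's implementation; `JobCreatedEvent`, `startableNow`, and the user slugs are hypothetical, and the function only answers "which pending events could start right now?"

```typescript
// Hypothetical event payloads matching the 'demo/job.created' trigger.
type JobCreatedEvent = { name: "demo/job.created"; data: { user_slug: string } };

const pendingEvents: JobCreatedEvent[] = [
  { name: "demo/job.created", data: { user_slug: "acme" } },
  { name: "demo/job.created", data: { user_slug: "acme" } },
  { name: "demo/job.created", data: { user_slug: "acme" } },
  { name: "demo/job.created", data: { user_slug: "globex" } },
];

// Model of a per-key limit: at most `limitPerKey` runs in progress
// for each distinct value of event.data.user_slug.
function startableNow(pending: JobCreatedEvent[], limitPerKey: number): JobCreatedEvent[] {
  const running = new Map<string, number>();
  return pending.filter((event) => {
    const key = event.data.user_slug;
    const count = running.get(key) ?? 0;
    if (count >= limitPerKey) return false; // stays queued behind this key's limit
    running.set(key, count + 1);
    return true;
  });
}

const startedSlugs = startableNow(pendingEvents, 1).map((e) => e.data.user_slug);
// startedSlugs: ["acme", "globex"]
```

The second and third "acme" jobs wait for the first one to finish, while the "globex" job starts immediately because it is counted against its own key.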

Going further - Combining concurrency limits

Inngest allows you to "stack" or combine concurrency limits. This means you can limit the concurrent items for individual users while also setting a total concurrency limit for all users. This provides a flexible way to manage resources. Let's take a look at a function that limits the concurrency to 5 per user, but also limits the total concurrency to 100:

    export const combinedConcurrencyLimits = inngest.createFunction(
      {
        id: 'multi-tenant-concurrency-with-shared-limit',
        concurrency: [
          {
            // Each unique user_slug has a concurrency limit of 5
            limit: 5,
            key: 'event.data.user_slug',
          },
          {
            // Overall concurrency for all users is limited to 100
            limit: 100,
          },
        ],
      },
      { event: 'demo/job.created' },
      async ({ event, step }) => {
        /* function logic omitted for example */
        return { status: 'success' };
      }
    );
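The interaction between the two limits can be modeled with a small counter. Again, this is a sketch of the behavior rather than how Inngest implements it; `StackedLimiter` and the user names are hypothetical.

```typescript
// Model of stacked limits: a job may start only if BOTH the per-user
// limit and the overall limit still have capacity.
class StackedLimiter {
  private runningPerUser = new Map<string, number>();
  private runningTotal = 0;
  private perUserLimit: number;
  private totalLimit: number;

  constructor(perUserLimit: number, totalLimit: number) {
    this.perUserLimit = perUserLimit;
    this.totalLimit = totalLimit;
  }

  tryStart(user: string): boolean {
    const current = this.runningPerUser.get(user) ?? 0;
    if (current >= this.perUserLimit || this.runningTotal >= this.totalLimit) {
      return false;
    }
    this.runningPerUser.set(user, current + 1);
    this.runningTotal += 1;
    return true;
  }

  finish(user: string): void {
    const current = this.runningPerUser.get(user) ?? 0;
    if (current === 0) return; // nothing running for this user
    this.runningPerUser.set(user, current - 1);
    this.runningTotal -= 1;
  }
}

const limiter = new StackedLimiter(5, 100);

// A single user asking for 8 slots only gets 5.
const soloStarts = Array.from({ length: 8 }, () => limiter.tryStart("user-0")).filter(Boolean).length;

// Thirty more users each ask for 5 slots; the overall limit caps the total.
let totalStarted = soloStarts;
for (let u = 1; u <= 30; u++) {
  for (let j = 0; j < 5; j++) {
    if (limiter.tryStart(`user-${u}`)) totalStarted++;
  }
}
// soloStarts: 5, totalStarted: 100

const blockedByTotal = limiter.tryStart("user-30"); // false: all 100 slots are taken
limiter.finish("user-0");
const admittedAfterFinish = limiter.tryStart("user-30"); // true: a slot was freed
```

The per-user limit keeps any one tenant from taking more than 5 slots, and the overall limit protects shared capacity once many tenants are active at the same time.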

Further Flexibility

Beyond using keys and combining concurrency limits, for additional control within your system, you might also consider:

- Setting concurrency limits that are shared across functions: Concurrency limits can be shared across multiple functions by specifying a `scope`, which allows you to combine limits across functions.

- Combining with other flow control methods: Inngest's concurrency limits can be combined with other methods like throttling and prioritization, giving you even more control over your system's workload management.
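As a rough sketch, a shared limit might be configured like this. The snippet assumes the `scope: 'account'` value and a quoted static `key`, as we understand them from the Inngest concurrency documentation, so check the exact option names and values there before relying on it. The `ConcurrencyOption` type, the function IDs, and the `"report-rendering"` key are hypothetical and exist only to keep the example self-contained.

```typescript
// Local type for illustration only; the real option types come from the Inngest SDK.
type ConcurrencyOption = {
  limit: number;
  key?: string;
  scope?: "fn" | "env" | "account";
};

// Assumption: with scope "account" and the same static key, every function
// using this option draws from one shared pool of 20 concurrent executions.
const sharedRenderingLimit: ConcurrencyOption = {
  scope: "account",
  key: '"report-rendering"', // quoted so it is treated as a static string
  limit: 20,
};

// Two hypothetical function configs that would share the limit above.
const renderPdfConfig = { id: "render-pdf", concurrency: [sharedRenderingLimit] };
const renderCsvConfig = { id: "render-csv", concurrency: [sharedRenderingLimit] };
```

Each config object would be passed as the first argument to `inngest.createFunction`, in the same position as the configuration objects in the earlier examples.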

Give it a try

Managing queues in multi-tenant systems can be complex, but with Inngest's built-in multi-tenant-aware concurrency controls, you can ensure a fair, efficient, and smooth experience in your applications. Learn more about how to implement concurrency limits in your functions by checking out the Inngest Concurrency Documentation.
